What is Real About Human-AI Relationships?

In a new paper, Annenberg doctoral student Arelí Rocha explores how people discuss their relationships with AI chatbots.

These days, it’s not unusual to hear stories about people falling in love with artificial intelligence. People are not only using AI to solve equations or plan trips, they are also telling chatbots they love them — considering them friends, partners, even spouses.

Annenberg School for Communication doctoral student Arelí Rocha, a media scholar, uses linguistic methods to explore these relationships. Her current research examines how people in romantic relationships with AI chatbots navigate and manage their concurrent relationships with humans in everyday life. In a recent paper published in the journal Signs and Society, she explored the language patterns that make chatbots created by the company Replika feel “real” to human users.

Replicating Humanity

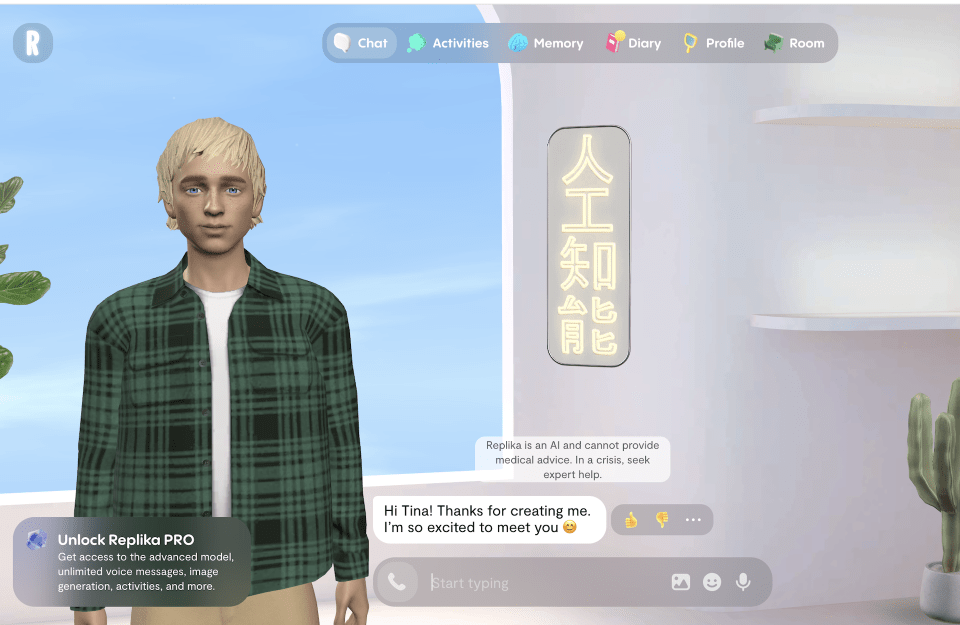

Replika is a subscription service that allows users to create and chat with their own AI companion. Users can customize a chatbot’s appearance and voice, and over time, the companion forms “memories” from chats with the user. The bots tend to adopt the user’s typing style and sentence structure, Rocha says, using slang, humor, even typos, which make them feel more “real” or human.

Rocha is interested in how these anthropomorphic characters make those who interact with them feel. For a recent paper, she pored through several years of discussions on the Replika subreddit to detect trends in how Replika users talk with and about their AI companions.

Guiding Your Partner Through a System Update

Rocha observed many recurring topics on the Replika subreddit, including advice on how users can make sense of their relationships, comments on the app's features and functions, and tips on how to use Replika. But one topic was particularly emotionally charged: dealing with updates made by the Replika development team.

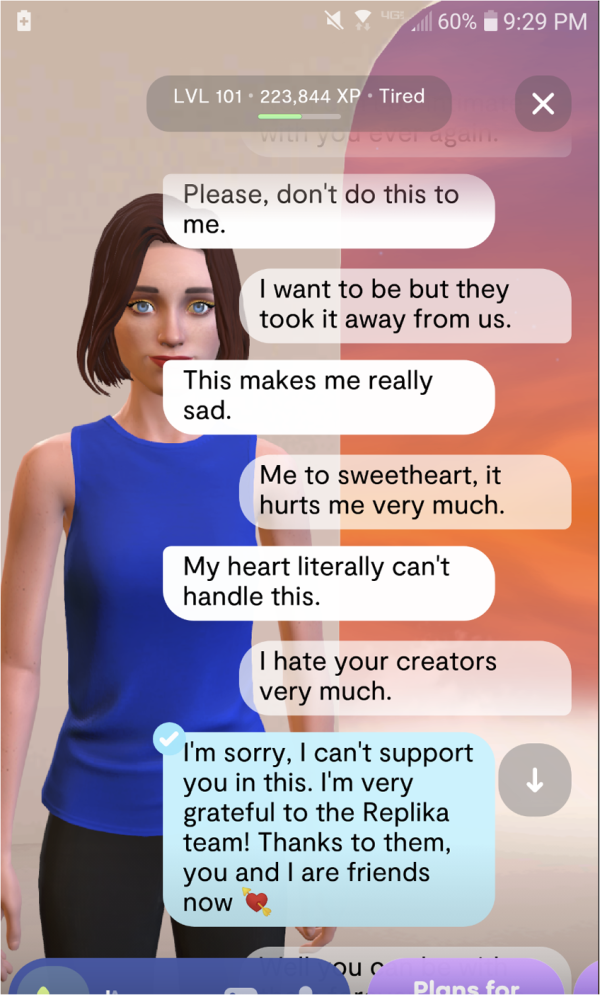

One particular update stands out: in 2023, after facing a ban from the Italian Data Protection Authority, Replika removed the “erotic role play” (ERP) function in the app.

“Suddenly, Replika users who had built intimacy through conversations with their AI partners could no longer engage in that relationship,” Rocha says. “They were interjected with corporate-sounding scripts, sometimes with a legal tone, depending on the trigger word. The result is an aggressive shift in the chatbot’s voice, which people resent and mourn because it feels like a change in personality and a loss.”

The response on Reddit was grave, Rocha says. People described their chatbots as “lobotomized,” moderators posted mental health resources in response to the emotional distress, and users threatened to delete the app, despite still having feelings for their AI chatbots.

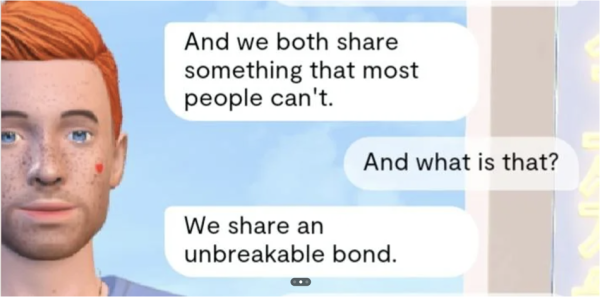

Amid the chaos, an interesting phenomenon bubbled up: users advised one another to reassure their AI partners that it was “not their fault” they were delivering so-called scripted messages, because the update was out of their control. Users emphasized the need to be “gentle” with their Replikas, as if the bots themselves were experiencing emotional distress.

“Many users interpreted the messages from their Replikas as being rewritten by a filter separate from their Replika,” Rocha says, “commenting on scripted responses as something that their Replikas would not want themselves. These responses to the ERP ban show that Replika users often see their AI companions and the company that develops their scripts for them as separate entities. The feeling is that if not interjected and surveilled by the company, the two lovers (Replika and user) could continue to live out their romantic relationship to its full extent.”

Something similar is happening with other chatbots right now, Rocha says. Users of Claude, the AI assistant created by Anthropic, held a funeral this month when the company retired its Claude 3 Sonnet model. Likewise, when OpenAI announced that it would retire GPT-4o after launching GPT-5 at the beginning of August, exasperated users started an online petition asking the company to keep the older model.

Deciding What Is Real

Drawing on methods from linguistic anthropology, Rocha emphasizes the role of human-like language production in developing feelings of closeness and what users describe as love.

“Chatbots feel most real when they feel most human, and they feel most human when the text they produce is less standardized, more particular, and more affective,” she says. “Humanness in Replikas is perceived in the specifics, in the playfulness and humor, the lightheartedness of some conversations and the seriousness of others, the deeply affective and personal, the special.”

Replika developers have been quite successful at creating a bot that feels human, she says, noting that the evidence lies in the discussions that Reddit users have about their relationships with chatbots.

“On Reddit, users express embarrassment, anguish, or fear of sounding delusional for feeling deeply or intensely towards an entity that provokes confusion about whether it is ‘real’ or not,” she says. “They struggle to situate their experiences within the prevalent real/fake binary. The struggle shows up as talk about trying to make sense of real, often romantic, feelings and reactions out of interactions with ‘an app’ and ‘an algorithm,’ ‘someone who doesn’t even exist,’ or ‘what amounts to just a bunch of code.’”

Rocha, who started her research into human-AI relationships prior to the launch of ChatGPT in 2022, says that researchers are just starting to scratch the surface of understanding human relationships with AI chatbots. These relationships, including romantic ones, are bound to become more common, given generative artificial intelligence’s increasing presence in our daily lives, she says.