A New Class Has Students Use AI To Do Their Homework

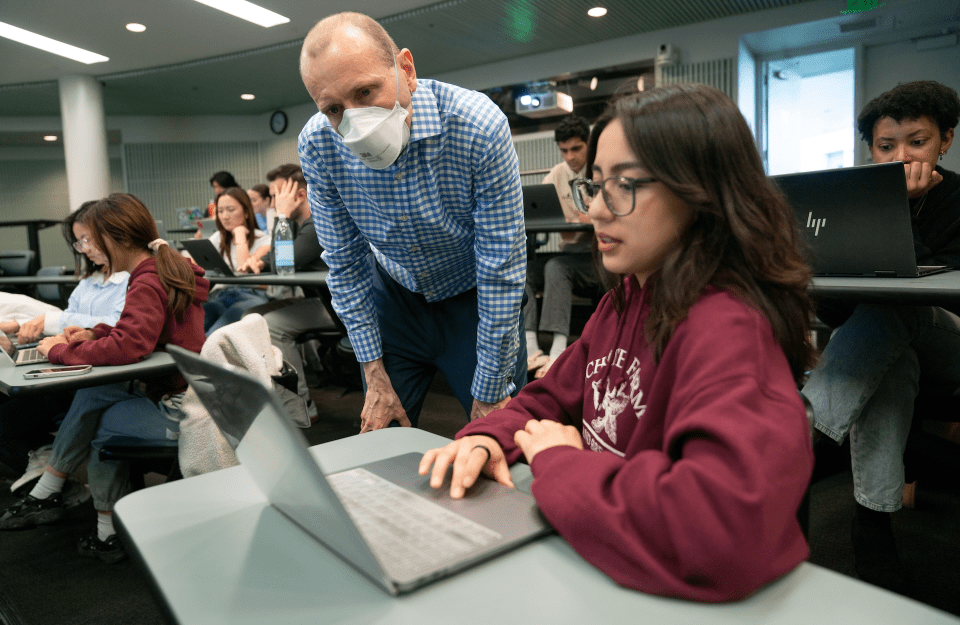

Lecturer Matt O’Donnell’s course “Talking with AI: Computational and Communication Approaches” encourages undergraduates to play with AI.

O’Donnell helps Communication major Nancy Miranda during class. Students each kept a blog during the class, chronicling what they did with AI and what they learned.

Increasingly, artificial intelligence (AI) can generate content that is astonishingly humanlike. ChatGPT can write emails that sound like any real office memo. Web apps can create the perfect headshot without any posing whatsoever. AI-generated fakes regularly fool people into believing they are watching genuine explicit private videos.

In lecturer Matthew Brook O’Donnell’s new course at the Annenberg School for Communication at the University of Pennsylvania, undergraduates are testing the boundaries of this emerging world of AI tools, exploring their ability to create accurate, trustworthy, and coherent content for research, media, and everyday life.

In the course, students examine large language models (LLMs) and use programming to “look under the hood” of generative AI tools and practice. The course emphasizes the human-technology partnership: students use LLMs as collaborators to enhance their own thinking. They also spend significant time engaging in human-like conversation with LLMs to understand what these models can and cannot do well.

O’Donnell — who has a background in communication, computational linguistics, and data science — wants students to not only understand how generative AI tools work, but also to question the ways in which humans communicate with these tools and how humans will use generative AI in the future.

“The dominant voices in the generative AI space are those with highly technical backgrounds,” he says, “but there’s room for a humanistic and social science viewpoint.”

Health Communication Research

Step into the class — COMM 4190: Talking with AI: Computational and Communication Approaches — and you’d recently have found groups of students writing and rewriting prompts for AI assistants, attempting to get these tools to create public health campaigns. It’s surprisingly difficult.

One pair aims to promote the health benefits of eating insects. They have been using text-to-image generator DALL·E to produce a slew of promotional posters. Many come out covered in incomprehensible text.

“DALL·E is still very inconsistent with language,” says sophomore Communication major Sean McKeown, co-creator of the campaign. “Sometimes the text makes sense and other times it’s pure gibberish. It’s hard to know which prompt will give you which.”

Other teams of students have been having similar problems, but also breakthroughs.

After lines and lines of prompts, a group working on an anti-drunk-driving campaign has success guiding Microsoft Copilot toward creating an image with the tone they are seeking.

These campaigns are inspired by the work of Professor Andy Tan, Director of the Health Communication & Equity Lab at Annenberg, whose study Project RESIST is dedicated to creating anti-vaping campaigns aimed at LGBTQ young women.

Tan hasn’t used AI in his own research and is interested in how the students’ work will compare to health campaigns created the conventional way.

Playing with AI

One element of the students’ assignments is to simply experiment with AI and keep blogs about their progress.

"The idea of the blogs is for the students to try out different ideas and experiments using LLMs and then to write those up," O'Donnell says. "However, there is no restriction on them not using an LLM to write some of the text. We are going to try an experiment to see if given the 16 blogs written by each student, an LLM could generate a new one in their style."

Their experiments have included asking advice on how to comfort a friend with an unrequited crush, sussing out whether AI can understand Jean-Paul Sartre, creating a storyline for a new season of the television show “The White Lotus,” and testing AI’s ability to solve riddles.

For one of her experiments, sophomore Emmy Keogh decided to play a game of broken telephone with an AI tool. She was curious to know how much an image would change after a few rounds of generating an image, turning that image into text, and using the text to create a new image.

After four iterations, the result had drifted from the original prompt, “a selfie of a dog wearing sunglasses, an upset cat, and a horse who is just happy to be there,” to an image of a hand drawing three dogs, a cat, a horse, and a person.
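For readers who want to try a similar experiment, the loop Keogh describes can be sketched in a few lines of Python. This is only an illustration, not her actual setup: the function name `broken_telephone` and both model callables are hypothetical, and the two stand-ins at the bottom merely simulate drift so the sketch runs without any AI service.

```python
from typing import Callable, List


def broken_telephone(
    prompt: str,
    text_to_image: Callable[[str], bytes],
    image_to_text: Callable[[bytes], str],
    rounds: int = 4,
) -> List[str]:
    """Run the text -> image -> text loop and return every description.

    The callables are placeholders (assumptions) for whatever image
    generator and captioning model a reader has access to.
    """
    descriptions = [prompt]
    for _ in range(rounds):
        image = text_to_image(descriptions[-1])    # generate an image from the latest text
        descriptions.append(image_to_text(image))  # describe that image in words
    return descriptions


# Hypothetical stand-ins so the sketch runs offline; real models would go here.
def fake_text_to_image(text: str) -> bytes:
    return text.encode("utf-8")


def fake_image_to_text(image: bytes) -> str:
    return image.decode("utf-8") + " (retold)"


history = broken_telephone(
    "a selfie of a dog wearing sunglasses",
    fake_text_to_image,
    fake_image_to_text,
)
for step, text in enumerate(history):
    print(step, text)
```

Comparing the first and last entries of `history` shows how far the description has drifted from the original prompt.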

Senior Leesa Quinlan ran into the limits of ChatGPT when she attempted to get the tool to write a 200-word love story. Only after seven tries did the AI produce something close to Quinlan’s request: a 199-word story. She’s not sure what prevented it from adding one more word.

“It just goes to show that even some of the tasks that we think ChatGPT might be best suited for are still difficult,” she wrote.
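A retry loop of the kind Quinlan describes might look like the following Python sketch. It is written under assumptions: the article does not say how she worded her prompts or counted words, so `request_story`, the whitespace-based `word_count`, and the canned generator are all hypothetical.

```python
from typing import Callable, Tuple


def word_count(text: str) -> int:
    # Assumption: words are whitespace-separated tokens.
    return len(text.split())


def request_story(
    generate: Callable[[str], str],
    target: int = 200,
    max_tries: int = 7,
) -> Tuple[str, int]:
    """Ask up to max_tries times; return the closest story and the tries used."""
    prompt = f"Write a love story that is exactly {target} words long."
    best = ""
    tries = 0
    for tries in range(1, max_tries + 1):
        story = generate(prompt)
        # Keep whichever story so far is nearest to the target length.
        if not best or abs(word_count(story) - target) < abs(word_count(best) - target):
            best = story
        if word_count(best) == target:
            break
    return best, tries


# Hypothetical stand-in that returns stories of fixed lengths instead of calling a model.
canned_lengths = iter([250, 180, 199])


def fake_generate(prompt: str) -> str:
    return " ".join(["word"] * next(canned_lengths))


story, tries = request_story(fake_generate, max_tries=3)
print(word_count(story), "words after", tries, "tries")
```

Checking the length in code rather than trusting the model's own claim is the design choice here, since the point of the experiment is that the model's count can be off.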

To play with AI’s image-generating ability, Quinlan had DALL·E create images of the recent solar eclipse as seen from cities that were in the path of totality and from cities that were not.

There were some hiccups (for one, DALL·E initially showed Atlanta in the path of totality, which it was not), but after playing with prompts for a while, she received “good enough” results.

Junior Kendall Allen decided to explore gender bias in AI and see whether ChatGPT addressed her differently depending on whether she told the AI she was a boy or a girl. It did, using different examples to explain topics like algebra and addition. For addition, it used cookies for girls and toy cars for boys.

Human Communication

Sophomore Jason Saito says that he appreciates the way that O’Donnell’s course provides context on how AI affects society as a whole. As a coder, he’s used to hearing about AI’s effect on technology, but not about its impact on communication.

Through experimentation, students have gathered that generative AI tools are not as good at communicating as humans are — for now.

“We effortlessly manage conversation in ways that current LLMs completely fail at,” O’Donnell says. “I hope students come away from the course with a better appreciation of their own amazing human intelligence, particularly when it comes to using language in a range of social contexts to build relationships, develop knowledge and solve problems.”